The OpenGL Pipeline Newsletter - Volume 004

Transforming OpenGL Debugging to a “White Box” Model

The OpenGL API is designed to maximize graphics performance. It is not designed for ease of debugging. A developer working on top of OpenGL sees the graphics system as a "black box": the program issues thousands of API calls into it and an image "magically" comes out of the system. But what happens when something goes wrong? How does the developer locate the OpenGL calls that caused the problem?

In this article we will demonstrate how gDEBugger transforms OpenGL application debugging tasks from a black box model to a white box model, letting the developer peer into OpenGL to see how individual OpenGL commands affect the graphics system.

State variable related problems

An OpenGL render context is a huge state variable container. These state variables, located inside the graphics system, are treated as "global variables" that are repeatedly queried and changed by numerous OpenGL API functions and mechanisms. However, when using a general purpose debugger, a developer cannot view state variable values, cannot put data breakpoints on state variables, and, at least in Microsoft Visual Studio®, cannot put breakpoints on OpenGL API functions that serve as their high-level access functions. This black box model makes it hard to locate state variable related problems.

Using gDEBugger's OpenGL State Variables view, a developer can select OpenGL state variables and watch their values interactively.

For example, if a program renders an object, but it does not appear in the rendered image, the developer can break the debugged application run when the relevant object is being rendered and watch the related OpenGL state variable values (GL_MODELVIEW_MATRIX, GL_PROJECTION_MATRIX, GL_VIEWPORT, etc.). After locating the state variable values that appear to cause the problem, the developer can put API breakpoints on their access functions (glRotatef, glTranslatef, glMultMatrixf, etc.) and use the Call Stack and Source Code views to locate the scenario that led to the wrong state variable value assignment.

Some OpenGL mechanisms use more than just a few OpenGL state variables. For debugging these mechanisms, gDEBugger offers a State Variables Comparison Viewer. This viewer allows a developer to compare the current state variable values to either:

- The OpenGL default state variable values.

- The previous debugger suspension values.

- A stored state variable value snapshot.

For example, if a game has a mode in which a certain character's shading looks fine, and another mode in which the character's shading looks wrong, the developer can:

- Break the game application run when the character is rendered fine.

- Export all state variables and their values into a "state variable snapshot" file.

- Break the application run again when the character is rendered incorrectly.

- Let gDEBugger's Comparison Viewer automatically compare the current OpenGL state variable values to the values stored in the exported state variable snapshot file.

If, for example, the game does not have a mode in which the character is rendered fine, the developer can:

- Break the game application run when the character is being rendered.

- Let gDEBugger's Comparison Viewer automatically compare the current OpenGL state variable values to the default OpenGL values.

Displaying only the state variable values that were changed by the game application helps the developer track the cause of the problem.
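As a rough illustration of the comparison idea (this is not gDEBugger's actual implementation), the following C sketch diffs two state snapshots and reports only the variables whose values changed. The StateVar type and the diff_snapshots function are hypothetical names invented for this example, and real OpenGL state is far more varied than four floats per variable.

```c
#include <stdio.h>

#define STATE_COMPONENTS 4

/* Hypothetical record for one state variable: a name plus up to four
   float components. */
typedef struct {
    const char *name;
    float value[STATE_COMPONENTS];
} StateVar;

/* Prints every variable whose value differs between the reference
   snapshot (defaults, previous suspension, or a stored snapshot) and the
   current snapshot, and returns the number of differing variables.
   Both arrays must list the same variables in the same order. */
int diff_snapshots(const StateVar *reference, const StateVar *current,
                   int count)
{
    int changed = 0;
    for (int i = 0; i < count; ++i) {
        int differs = 0;
        for (int c = 0; c < STATE_COMPONENTS; ++c) {
            if (reference[i].value[c] != current[i].value[c])
                differs = 1;
        }
        if (differs) {
            printf("%s: (%g, %g, %g, %g) -> (%g, %g, %g, %g)\n",
                   current[i].name,
                   reference[i].value[0], reference[i].value[1],
                   reference[i].value[2], reference[i].value[3],
                   current[i].value[0], current[i].value[1],
                   current[i].value[2], current[i].value[3]);
            ++changed;
        }
    }
    return changed;
}
```

Passing the default values as the reference corresponds to the second scenario above; passing a snapshot captured while the character rendered correctly corresponds to the first.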

Breaking the debugged application run

In the previous section, we asked the developer to "Break the game application run when the character is being rendered." This allows the developer to view state variable values, texture data, etc. when a certain object is being rendered. gDEBugger offers a few mechanisms to do that:

- API function breakpoints: The Breakpoint dialog lets a developer set breakpoints on OpenGL, OpenGL ES, WGL, GLX, EGL, and extension functions.

- The Draw Step command allows a developer to advance the debugged application process to the next OpenGL function call that has a "visible impact" on the rendered image.

- The Interactive Mode Toolbar enables viewing of the graphics scene as it is being rendered, in full speed or in slow motion mode. This is done by forcing OpenGL to draw into the front color buffer, flushing the graphics pipeline after each OpenGL API function call and adding the desired slow motion delay.

Texture related problems

gDEBugger's Textures Viewer allows viewing a rendering context's texture objects, their parameters, and each texture's loaded data as an image. Bound textures and active textures (those whose bind targets are enabled) are marked. This helps the developer to pinpoint texture related problems quickly and easily.

Program and shader related problems

gDEBugger's Shaders Source Code Editor displays a list of programs and shaders allocated in each rendering context. The editor view displays a shader's source code and parameters, a program's parameters, a program's attached shaders, and its active uniform values. The editor also allows editing shader source code, recompiling shaders, and linking and validating programs "on the fly." These powerful features reduce the time needed to develop GLSL programs and shaders and to debug related problems.

We hope this article demonstrated how gDEBugger transforms the OpenGL debugging task to a white box model, minimizing the time required for finding those "hard to catch" OpenGL-related bugs and improving your program's quality and robustness.

Yaki Tebeka, Graphic Remedy

CTO & Cofounder

Editor's Note: You'll remember from our first edition that Graphic Remedy and the ARB have teamed up to make gDEBugger available free to non-commercial users for a limited time.

Another Object Lesson

The OpenGL Longs Peak object model is substantially defined now, and we have a good notion of what a Longs Peak program will look like at a high level. Many smaller details are still being filled in, but after reading this article you should understand Longs Peak in considerable detail. For a background refresher, refer to "The New Object Model" in OpenGL Pipeline Volume 002, and "Using the Longs Peak Object Model" in OpenGL Pipeline Volume 003.

What's In A Namespace? Or, a GL by any other prefix would smell as sweet.

An important decision is that the OpenGL Longs Peak API will exist in a new namespace. Originally we thought Longs Peak could continue to use "gl" prefixed functions, "GL" prefixed types, and "GL_" prefixed tokens, but as we wrote up object specifications, we realized there were too many collisions. For example, both OpenGL 2.1 and Longs Peak have a Map Buffer entry point, but they take different parameters. We haven't chosen the namespace prefix yet; it's a marketing and branding issue, not a technical issue. As a placeholder until that's decided, we're using "lp" as the prefix.

The Object Hierarchy

The objects defined in Longs Peak fall into several different categories depending on their behavior and semantics. In a true object-oriented language binding of the API, these categories would be abstract classes from which the concrete classes inherit behavior. Since our C API doesn't support inheritance, the categories are useful primarily as a conceptual tool for understanding the API. In any event, the categories are as follows:

- Templates are client state, meaning they exist in the client (application) address space. All the other categories are server state, existing in the Longs Peak driver address space. Templates are fully mutable, meaning that any of their properties can be changed at any time; this makes it easier to reuse them for generating multiple objects. Templates, and the APIs to create and use them, are described more fully in OpenGL Pipeline 003.

- State Objects contain a group of closely related attributes defining the behavior of some part of the graphics pipeline. They are fully immutable once created, which allows the driver to pre-cache derived state and otherwise optimize use of these objects, and they may be shared by multiple contexts. State objects are typically small. State object classes described below include format objects, shader objects, and texture filter objects.

- Data Objects have an immutable structure (organization) defined when they are created, and a fully mutable data store filling out that structure. They may be shared by multiple contexts, although there are some remaining issues regarding when changes made in one context to the data store of an object will be visible to another context using the same object. Data object classes described below include buffer objects, image objects, and several types of sync objects (fences and queries).

- Container Objects have one or more mutable attachments, which are references to other data, state, or container objects. They also have immutable attachment properties, which describe how to interpret their attachments. Container objects may not be shared by multiple contexts, mostly because the side effects of changing their attachments may be costly. For example, changing a shader attachment of a program object in use by another context could invalidate the state of that context at worst, and force time-consuming and unexpected relinking and validation at best. Container object classes described below include frame buffer objects, program objects, and vertex array objects.
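The relationship between a mutable template and the immutable state objects created from it can be sketched in plain C. This is only an illustration of the idea under stated assumptions: FilterAttribs, FilterObject, filter_object_create, and filter_object_destroy are hypothetical names, not Longs Peak entry points, and a real driver would do far more at creation time.

```c
#include <stdlib.h>

/* Hypothetical attribute set. Used directly, it plays the role of a
   template: client state that the application may edit at any time. */
typedef struct {
    int min_filter;
    int mag_filter;
} FilterAttribs;

/* Stand-in for a state object. It is only ever handed out through a
   pointer-to-const, so callers cannot modify it after creation. */
typedef struct {
    FilterAttribs attribs;
} FilterObject;

/* Creates an object by copying the template's current values. Later
   edits to the template do not affect objects already created from it.
   Returns NULL if allocation fails. */
const FilterObject *filter_object_create(const FilterAttribs *tmpl)
{
    FilterObject *obj = malloc(sizeof *obj);
    if (obj != NULL)
        obj->attribs = *tmpl;
    return obj;
}

/* Releases an object returned by filter_object_create. */
void filter_object_destroy(const FilterObject *obj)
{
    free((void *)obj);
}
```

Because the object's values are fixed at creation, one template can be edited and reused to stamp out several distinct objects, which is the reuse pattern the Templates bullet describes.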

Concrete Object Descriptions

Each of the concrete object classes mentioned above is explained in somewhat more detail here. The descriptions are organized according to the dependencies of the object graph, to avoid backwards references.

Format Objects fully resolve data formats that will be used in creating other types of objects. Such an object's defined usage must either match or be a subset of the usage supported by its format object. Format objects are a powerful generalization of the internal format parameter used in specifying texture and pixel images in OpenGL 2.1. In addition to the raw data format, format objects include:

- intended usage: pixel, texture, and/or sample image (and, for textures, which dimensionalities: 1D, 2D, 3D, cube map, and array), vertex buffer, and/or uniform buffer

- minimum and maximum allowed texture or pixel image size

- mipmap pyramid depth and array size

- and whether data can be mipmapped, can be mapped to client address space, or is shareable.

Buffer Objects replace vertex arrays and pixel buffers, texture images, and render buffers from OpenGL 2.1. There are two types of buffer objects. Unformatted buffers are used to contain vertex data (whose format and interpretation may change depending on the vertex buffer object they're bound to) or uniform blocks used by shaders. Images are formatted buffers with a size, shape (dimensionality), and format object attachment. Changing buffer contents is done with APIs to load data (lpBufferData and lpImageData[123]D) and to map buffers in and out of client memory with several options allowing considerable flexibility in usage. See the article "Longs Peak Update: Buffer Object Improvements" elsewhere in this issue for more details.

Texture Filter Objects replace the state set with glTexParameter in OpenGL 2.1 controlling how sampling of textures is performed, such as minification and magnification filters, wrap modes, LOD clamps and biases, border colors, and so on. In Longs Peak, texture images and texture filters have been completely decoupled; a texture filter can be used with many different image objects, and an image can be used with many different texture filter objects.

Shader Objects are a (typically compiled) representation of part or all of a shader program, defined using a program string. A shader object may represent part or all of a stage, such as vertex or fragment, of the graphics pipeline.

Program Objects are container objects which link together one or more shader objects and associate them with a set of images, texture filters, and uniform buffers to fully define one or more stages in the programmable graphics pipeline. There is no incremental relinking; if a shader needs to be changed, simply create a new program object.

Framebuffer Objects are containers which combine one or more images to represent a complete rendering target. Like FBOs in OpenGL 2.1, they contain multiple color attachments, as well as depth and stencil attachments. When image objects are attached to an FBO, a single 2D image must be selected for attachment. For example, a 3D mipmap could have a particular mipmap level and Z offset slice selected, and the resulting 2D image attached as a color attachment. Similarly, a specific cubemap face could be selected and attached as a combined depth/stencil attachment. Each attachment point has an associated format object for determining image compatibility. When an image is bound to an FBO attachment, the format object used to create the image and the format object associated with the attachment point must be the same format object or validation fails. This somewhat draconian constraint greatly simplifies and speeds validation.
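To see why requiring the same format object keeps validation cheap, consider this minimal C sketch. It assumes validation can be reduced to a pointer comparison; the Format, Image, and AttachPoint types and the attach_image function are hypothetical stand-ins, not the Longs Peak API.

```c
#include <stdbool.h>
#include <stddef.h>

typedef struct { int bits_per_pixel; } Format;  /* stand-in format object */
typedef struct { const Format *format; } Image; /* image made with a format */

typedef struct {
    const Format *required;  /* format object tied to the attachment point */
    const Image  *attached;  /* currently attached image, or NULL */
} AttachPoint;

/* Attaches the image only if it was created with the very same format
   object as the attachment point. Two distinct format objects with
   identical contents are still rejected, so no field-by-field comparison
   is ever needed. */
bool attach_image(AttachPoint *point, const Image *image)
{
    if (image->format != point->required)
        return false;
    point->attached = image;
    return true;
}
```

The check is a single comparison regardless of how many properties a format object carries, which is one plausible reading of how the constraint "simplifies and speeds validation."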

Vertex Array Objects are containers which encapsulate a complete set of vertex buffers together with the interpretation (stride, type, etc.) placed on each of those buffers. Geometry is represented in Longs Peak with VAOs, and unlike OpenGL 2.1, VAOs are entirely server state. That means no separate client arrays or enables! It also becomes very efficient to switch sets of vertex buffers in and out, since only a single VAO need be bound -- in contrast to the many independent arrays, and their interpretation, that have to be set in OpenGL 2.1 when switching VAOs. (The vendor extension GL_APPLE_vertex_array_object provides similar efficiency today, but is only available in Apple's implementation of OpenGL.)

Sync Objects are semaphores which may be set, polled, or waited upon by the client, and are used to coordinate operations between the Longs Peak server and all of the application threads associated with Longs Peak contexts in the same share group. Two subclasses of sync objects exist to date. Fence Syncs associate their semaphore with completion of a particular command (set with lpFence) by the graphics hardware, and are used to indicate completion of rendering to a texture, completion of object creation, and other such events. Query Syncs start a region with lpBeginQuery, and keep count of fragments rendered within that region. After lpEndQuery is called to end the query region, the semaphore is signaled once the final fragment count is available within the query object. In the future we will probably define other types of syncs associated with specific hardware events -- an example would be a sync associated with monitor vertical retrace -- as well as ways to convert syncs into equivalent platform-specific synchronization primitives, such as Windows events or pthreads semaphores.

The remaining objects making up Longs Peak are still being precisely defined. They are likely to include: display list objects, which capture the vertex data resulting from a draw call for later reuse; per-sample operation objects, which capture the remaining fixed-functionality state used for scissor test, stencil test, depth test, blending, and so on; and perhaps a "miscellaneous state" object containing remaining bits of state that don't have an obvious better home, such as edge flag enables, point and line smooth enables, polygon offset parameters, and point size.

Context is Important

-- with apologies to J.R.R. Tolkien

Just as in OpenGL 2.1, the Longs Peak graphics context encapsulates the current state of the graphics pipeline. Unlike OpenGL 2.1, most context state is encapsulated in attributes of server objects. A small number of objects are required to define the pipeline state. These objects are bound to the context (see figure 1); changing a binding to refer to another object updates the graphics hardware state to be consistent with that object's attributes.

Changing state by binding objects can be very efficient compared to the OpenGL 2.1 model, since we are changing large groups of state in one operation, and much of that state may have already been pre-validated while constructing the object being bound. This approach will also be useful for applications and middleware layers performing complex state management. It is both more general and more powerful than either the glPushAttrib/glPopAttrib commands or encapsulating state changes in GL display lists, which are the only ways to change large groups of state in one operation today.

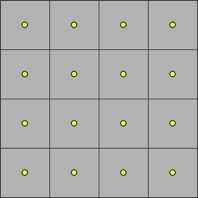

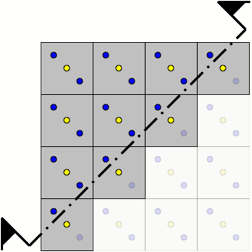

Figure 1: Graphics Context Bindings. The Longs Peak context contains bindings for geometry (a vertex array object), programs (a program object), a rendering target (framebuffer object), sample operations state, and remaining fixed-functionality state affecting rasterization, hints and other miscellaneous state. In this diagram, yellow objects are containers, green objects are state objects, blue objects are data objects, red blocks represent attributes of container and state objects, and arrows represent attachments to objects or bindings to the context. The context itself, while not strictly speaking an object, is shown in yellow-red to indicate that it takes on aspects of a container object.


Drawing Conclusions

Once all required objects are bound to the context, we can draw geometry. The drawing call looks very much like the OpenGL 2.1 glDrawArrays, but combines multiple draw array and primitive instancing parameters into a single call:

void lpDrawArrays(LPenum mode, LPint *first,
                  LPint *count, LPsizei primCount,
                  LPsizei instanceCount)

mode is the primitive type, just as in OpenGL 2.1. first and count are arrays that together specify primCount ranges of indices to draw: count[0] vertices starting at index first[0] are drawn from the currently bound vertex array object and passed to the vertex program, then count[1] vertices starting at index first[1], and so on, ending with count[primCount-1] vertices starting at index first[primCount-1]. Finally, instanceCount is used for geometry instancing; the entire set of ranges will be drawn instanceCount times, each time with an instance ID available to the vertex shader, starting at 0 and ending at instanceCount-1.
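The traversal order described above can be emulated on the CPU with three nested loops. The sketch below is an assumption-laden illustration, not driver code: expand_draw_arrays is a hypothetical helper that records which vertex index and instance ID would be fetched, in order, using plain int in place of the LP types.

```c
#include <stddef.h>

/* Records, in drawing order, the vertex index and instance ID of every
   vertex that a multi-range, instanced draw would fetch. At most
   `capacity` entries are written to out_index and out_instance; the
   return value is the total number of vertices the draw covers. */
size_t expand_draw_arrays(const int *first, const int *count,
                          int prim_count, int instance_count,
                          int *out_index, int *out_instance,
                          size_t capacity)
{
    size_t n = 0;
    for (int inst = 0; inst < instance_count; ++inst) {   /* whole set per instance */
        for (int p = 0; p < prim_count; ++p) {            /* each range in turn */
            for (int i = 0; i < count[p]; ++i) {          /* vertices in the range */
                if (n < capacity) {
                    out_index[n] = first[p] + i;
                    out_instance[n] = inst;
                }
                ++n;
            }
        }
    }
    return n;
}
```

For example, with first = {0, 10}, count = {3, 2}, primCount = 2, and instanceCount = 2, the helper visits indices 0, 1, 2, 10, 11 for instance 0 and then the same five indices again for instance 1.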

A similar variation of glDrawElements is also provided:

void lpDrawElements(LPenum mode, LPsizei *count,
                    LPsizeiptr *indices,
                    LPsizei primCount,
                    LPsizei instanceCount)

The drawing calls are among the small number of Longs Peak entry points that do not take an object as an argument, since all the objects they use are already bound to the graphics context.

Outline for Success

-- Charles Barkley

Finally, we've reached the point of outlining a Longs Peak sample program. The outline is not intended to be detailed source code, just to give a sense of the steps that will need to be taken to fully define the objects required for rendering. While this initialization looks complex, most of it is simple "boilerplate" code that can readily be encapsulated in utility libraries or middleware such as GLUT. It is also likely that at least some of the required objects can be predefined by the driver; for example, if the application is rendering to a window-system provided drawable, then a "default framebuffer object" will be provided.

// Create a framebuffer object to render to
// This is the fully general form for offscreen rendering, but
// there will be a way to bind a window-system provided drawable
// as a framebuffer object, or as the color image of an FBO, as well.
LPformat cformat, dformat, sformat = { create format
    objects for color, depth, and stencil buffers
    respectively }
LPframebuffer fbo = { create a framebuffer object,
    specifying cformat, dformat, and sformat as the
    required formats of color buffer 0, the depth buffer,
    and the stencil buffer respectively }
LPbuffer cimage, dimage, simage = { create image
    objects, specifying cformat, dformat, and sformat as
    the formats of the color image, depth image, and
    stencil image respectively }
Attach cimage, dimage, and simage to fbo at its color
    buffer 0, depth buffer, and stencil buffer attachment
    points respectively

// Create a program object to render with
LPshader vertshader, fragshader = { create shader
    objects for the vertex and fragment shader stages,
    specifying the shader program text for each stage as
    an attribute of the respective shader object }
LPprogram program = { create program object,
    specifying vertshader and fragshader as attributes of
    the program object }
LPbuffer vertbuffer, fragbuffer = { create unformatted
    buffer objects for the uniform storage used by the
    vertex and fragment shaders, respectively }
Attach vertbuffer and fragbuffer to program as the
    backing store for the uniform partitions of the vertex
    and fragment shaders, respectively

// Create vertex attribute arrays to render with
LPbuffer attribs = { create an unformatted buffer
    object containing all the attribute data required by
    the bound programs }
LPvertexArray vao = { create a vertex array object
    with specified size/type/stride/offset attributes for
    each required attribute array }
Attach attribs to vao at each attachment point for a
    required attribute

// Create miscellaneous required state objects
LPsampleops sampleops = { create sample operations
    object with specified fixed-function depth test,
    stencil test, blending, etc. attributes }
LPmiscstate misc = { create "miscellaneous state"
    object with specified rasterization settings, hints,
    etc. }

// Bind everything to the context
lpBindVertexArray(vao);
lpBindProgram(program);
lpBindFramebuffer(fbo);
lpBindSampleops(sampleops);
lpBindMiscState(misc);

// Finally, all required objects are defined and we
// can draw a single triangle (or lots of them)
LPint first = 0, count = 3;
lpDrawArrays(LP_TRIANGLES, &first, &count, 1, 1);

While we still have a lot of work to do, and the final details may differ slightly, the ARB has now defined the overall structure of the Longs Peak API and the organization and definition of the object classes in the API. We'll continue to show you details of Longs Peak in future issues of OpenGL Pipeline, and when Longs Peak is released, we’ll expand these articles into a tutorial and sample code in the ARB's online SDK.

Jon Leech

OpenGL Spec Editor / ARB Ecosystem TSG Chair

(Subtitles in this article are thanks to the late-night availability of Google and www.brainyquote.com)

Longs Peak Update: Buffer Object Improvements

Longs Peak offers a number of enhancements to the buffer object API to help streamline application execution. Applications that are able to leverage these new features may derive a considerable performance benefit. In particular they can boost the performance of applications that have a lot of dynamic data flow in the form of write-once/draw-once streamed batches, procedurally generated geometry, or frequent intra-frame edits to buffer object contents.

Under OpenGL 2.1, there are two ways to transfer data from the application to a buffer object: the glBufferData/glBufferSubData calls, and the glMapBuffer/glUnmapBuffer calls. The latter themselves do not transfer any data but instead allow the application temporary access to read and write the contents of a buffer object directly. The Longs Peak enhancements described here are focused on the latter style of usage.

The behavior of glMapBuffer is not very complicated under OpenGL 2.1: it will wait until all pending drawing activity using the buffer in question has completed, and it will then return a pointer representing the beginning of the buffer, implicitly granting access to the entire buffer. Once the application has finished reading or writing data in the buffer, glUnmapBuffer must be called to return control of the storage to GL. This model is straightforward and easy to code to, but can hold back performance during some usage patterns. The usage patterns of interest are strongly centered on write-only traffic from the application, and the enhancements to the Longs Peak API reflect that.

Longs Peak will allow the application to exercise tighter control over the behavior of glMapBuffer (tentatively referred to as lpMapBuffer), by offering these new requests:

- mapping only a specified range of a buffer

- strict write-only access

- explicit flushing of altered/written regions

- whole-buffer invalidation

- partial-buffer invalidation

- non-serialized access

An application may benefit from using some or all of the above techniques. They're listed above in roughly increasing order of challenge for the developer to utilize correctly; getting the maximum performance may take more developer work and testing, depending on how application code is structured. Let's look at each of the options in more detail. Each is exposed via an individual bit flag in the access parameter to the lpMapBuffer call.

Sub-range mapping of a buffer: Under OpenGL 2.1 it was not possible to request access to a limited section of a buffer object; mapping was an “all or nothing” operation. One side effect of this is that GL has no way to know how much data was changed before unmapping, whether it involves a single range of data or potentially multiple ranges of data. In Longs Peak, by explicitly mapping sub-ranges of a buffer, the application can provide useful information to help accelerate the delivery of those edits to the buffer contents.

For example, if the application maintains a multi-megabyte vertex buffer and wishes to change a few kilobytes of localized data, it can map just the area of interest, write any changes to it, and then unmap. On implementations where altered data ranges must be copied or mirrored to GPU storage, the work at unmap time is thereby reduced significantly.

While in some cases an application may be able to achieve the same partial edit to a large buffer by using glBufferSubData, that technique assumes the original data exists in a readily copyable form. This enhancement to the lpMapBuffer path allows more efficient partial edits to a buffer object even when the CPU is sourcing the data directly via some algorithm, such as a decompression technique or procedural animation system (particles, physics, etc.). The application can map the range of interest, use the pointer as the target address for the code actually writing the finished data, and then unmap.
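The saving from sub-range mapping can be made concrete with a small simulation. The sketch below assumes an implementation that mirrors the mapped range to GPU storage at unmap time; SimBuffer, sim_map_range, and sim_unmap are hypothetical names used only to count how many bytes such an implementation would copy. They are not lpMapBuffer or any real driver interface.

```c
#include <stddef.h>
#include <string.h>

#define SIM_BUF_SIZE 1024u

typedef struct {
    unsigned char gpu[SIM_BUF_SIZE];      /* stand-in for GPU-side storage */
    unsigned char staging[SIM_BUF_SIZE];  /* CPU-visible copy given to the app */
    size_t map_offset;
    size_t map_length;                    /* 0 while the buffer is unmapped */
    size_t bytes_uploaded;                /* total bytes copied at unmap time */
} SimBuffer;

/* Maps [offset, offset + length) and returns a pointer to its first byte,
   or NULL if the buffer is already mapped or the range is invalid. */
unsigned char *sim_map_range(SimBuffer *b, size_t offset, size_t length)
{
    if (b->map_length != 0 || length == 0 ||
        offset > SIM_BUF_SIZE || length > SIM_BUF_SIZE - offset)
        return NULL;
    b->map_offset = offset;
    b->map_length = length;
    return b->staging + offset;
}

/* Unmaps the buffer, copying only the mapped range to "GPU" storage. */
void sim_unmap(SimBuffer *b)
{
    memcpy(b->gpu + b->map_offset, b->staging + b->map_offset,
           b->map_length);
    b->bytes_uploaded += b->map_length;
    b->map_length = 0;
}
```

In this model, editing 16 bytes of a 1024-byte buffer costs a 16-byte copy at unmap, whereas an all-or-nothing mapping would have to treat the whole buffer as potentially changed.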

Write-only access: While a request for write-only access was possible in OpenGL 2.1, reading from those mappings was discouraged in the spec as likely to be slow or capable of causing a crash. Under Longs Peak this is even more strongly forbidden; reading from a write-only mapping may either crash or return garbage data even if the read succeeds. If there is any need to read from a mapped buffer in a Longs Peak program, you absolutely must request read access in the access parameter to lpMapBuffer.

By defining this behavior more strictly we can enhance the notion of one-way data flow from CPU to memory to GPU and free up the driver to do some interesting optimizations, the net effect being that lpMapBuffer can return more quickly with a usable pointer for writing when needed. Write-only access is especially powerful in conjunction with one or more of the options described below.

Explicit flushing: In some use cases it can be beneficial for the application to map a range of a buffer representing the “worst case” size needs for the next drawing operation, then write some number of vertices up to that amount, and then unmap. Normally this would imply to GL that all of the data in the mapped range had been changed. But by requesting explicit flushing, the application can undertake the responsibility of informing GL which regions were actually written. Use of this option requires the application to track precisely which bytes it has written to, and to tell GL where those bytes are prior to unmap through use of the lpFlushMappedData API.

For some types of client code where vertices are being generated procedurally, it can be difficult to predict the number of vertices generated precisely in advance. With explicit flush, the application can “reserve” a worst-case-sized region at map time, and then “commit” the portion actually generated through the lpFlushMappedData call, prior to unmap.

This ability to convey precisely how much data was written (and where) has a number of positive implications for the driver with respect to any temporary memory management it may need to do in response to the request. While an application can and should use the map-time range information to constrain the amount of storage being manipulated, explicit flushing allows for additional control if that amount cannot be precisely predicted at map time.

This is another case where the same net effect could be accomplished by using a separate temp buffer for the initial data generation, followed by a call to glBufferSubData. However, being able to write the finished data directly into the mapped region can eliminate a copying step for the application and also potentially reduce processor cache pollution depending on the implementation.
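The "reserve, then commit" pattern can be sketched with a similar simulation. Here the mapping itself implies nothing about what changed; only ranges passed to an explicit flush call are copied. FlushBuffer, fb_map_explicit, fb_flush, and fb_unmap are hypothetical names, and fb_flush merely plays the role the article assigns to lpFlushMappedData, whose real signature is not given in the text.

```c
#include <stddef.h>
#include <string.h>

#define FLUSH_BUF_SIZE 4096u

typedef struct {
    unsigned char gpu[FLUSH_BUF_SIZE];      /* stand-in for GPU-side storage */
    unsigned char staging[FLUSH_BUF_SIZE];  /* memory the application writes */
    size_t map_offset;
    size_t map_length;                      /* 0 while unmapped */
    size_t bytes_uploaded;                  /* bytes committed by flushes */
} FlushBuffer;

/* Reserves a worst-case range for writing. Returns NULL if the buffer is
   already mapped or the range is invalid. */
unsigned char *fb_map_explicit(FlushBuffer *b, size_t offset, size_t length)
{
    if (b->map_length != 0 || length == 0 ||
        offset > FLUSH_BUF_SIZE || length > FLUSH_BUF_SIZE - offset)
        return NULL;
    b->map_offset = offset;
    b->map_length = length;
    return b->staging + offset;
}

/* Commits `length` bytes starting `rel_offset` bytes into the mapping.
   Returns 1 on success, 0 if the range falls outside the mapping. */
int fb_flush(FlushBuffer *b, size_t rel_offset, size_t length)
{
    if (rel_offset > b->map_length || length > b->map_length - rel_offset)
        return 0;
    memcpy(b->gpu + b->map_offset + rel_offset,
           b->staging + b->map_offset + rel_offset, length);
    b->bytes_uploaded += length;
    return 1;
}

/* Ends the mapping. Unflushed bytes are never copied. */
void fb_unmap(FlushBuffer *b)
{
    b->map_length = 0;
}
```

An application that reserves 256 bytes but generates only 40 would call fb_flush for those 40 bytes before unmapping, so the simulated driver moves 40 bytes rather than 256.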

Whole-buffer invalidation: This is analogous to the glBufferData(NULL) idiom from OpenGL 2.1, whereby a new block of uninitialized storage is atomically swapped into the buffer object, but the old storage is detached for the driver to release at a later time after pending drawing operations have completed -- also known as “buffer orphaning.” Since Longs Peak no longer allows the glBufferData(NULL) idiom, this functionality is now provided as an option to the lpMapBuffer call. This is especially useful for implementing efficient streaming of variable sized batches; an application can set up a fixed size buffer object, then repeatedly fill and draw at ascending offsets -- packing as many batches as possible into the buffer -- then perform a full buffer invalidation and start over at offset zero.

Partial-buffer invalidation: This option can and should be invoked when the application knows that none of the data currently stored within the mapped range of a buffer needs to be preserved. That is, the application’s intent is to overwrite all or part of that range, and only the newly written data is expected to have any validity upon completion. This option is only usable in conjunction with write-only access mode. It has a number of positive implications for performance, as it releases the driver from the requirement of providing any valid view of the existing storage at map time. Instead it is free to provide scratch memory in order to return a usable pointer to the application more quickly.

Generally speaking, a program can and should make use of both partial and whole buffer invalidation, but the usage frequency of the former is expected to be much higher. Restated, partial invalidation is useful for efficiently accumulating individual batches of CPU-sourced data into a common buffer, whereas whole buffer invalidation should be invoked when one buffer fills up and a fresh batch of storage is needed. Whole buffer invalidation, like glBufferData(NULL) in OpenGL 2.1, enables the application to perform these hand-offs without any need for sync objects, fences, or blocking.
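The streaming pattern of packing batches at ascending offsets and starting over when the buffer fills comes down to a small piece of bookkeeping. The sketch below shows only that bookkeeping, under the assumption that the caller performs the actual mapping; StreamBuffer and stream_alloc are hypothetical names.

```c
#include <stddef.h>

typedef struct {
    size_t capacity;        /* fixed size of the buffer object */
    size_t cursor;          /* next free offset */
    unsigned orphan_count;  /* whole-buffer invalidations requested so far */
} StreamBuffer;

/* Chooses where the next batch of `size` bytes should be written.
   Sets *orphaned to 1 when the batch did not fit in the remaining space,
   meaning the caller should request whole-buffer invalidation and write
   at offset zero; otherwise sets it to 0, and the caller would map the
   returned range with partial invalidation. Returns (size_t)-1 if the
   batch is larger than the whole buffer. */
size_t stream_alloc(StreamBuffer *s, size_t size, int *orphaned)
{
    *orphaned = 0;
    if (size > s->capacity)
        return (size_t)-1;
    if (size > s->capacity - s->cursor) {
        s->cursor = 0;          /* start over in fresh storage */
        s->orphan_count++;
        *orphaned = 1;
    }
    size_t offset = s->cursor;
    s->cursor += size;
    return offset;
}
```

With a 100-byte buffer and 40-byte batches, the first two batches land at offsets 0 and 40, and the third triggers a whole-buffer invalidation and lands at offset 0 again.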

Non-serialized access: This option allows an application to assume complete responsibility for scheduling buffer accesses. When this option is engaged, lpMapBuffer need not block even if there is pending drawing activity on the buffer of interest. Access may be granted without consideration for any such concurrent activity. Another term for this behavior is "non-blocking mapping." If you have written code for OpenGL 2.1 and run into stalls in glMapBuffer, this option may be of interest.

When used in conjunction with write-only access and partial invalidation, this option can enable the application to efficiently accumulate any number of edits to a common buffer interleaved with draw calls using those regions, keeping the drawing thread largely unblocked and effectively decoupling CPU progress from GPU progress. On contemporary multi-core-aware implementations where multiple frames' worth of drawing commands may be enqueued at any given moment, the impact of being able to interleave mapped buffer access with drawing requests (without blocking the application) can be quite significant.

An application can only safely use this option if it has taken the necessary steps to ensure that regions of the buffer being used by drawing operations are not altered by the application before those operations complete. This can be accomplished through proper use of sync objects, or by enforcing a write-once policy per region of the buffer. A developer must not set this bit and expect everything to keep working as-is; existing access and drawing patterns must be analyzed carefully before this technique is adopted. The caution level on the part of the developer must be very high, but the potential rewards are also significant.
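The interplay between partial invalidation, whole-buffer invalidation, and a write-once policy can be sketched as pure bookkeeping. The following is a minimal model in Python, not Longs Peak code: it makes no OpenGL calls, and the class name `StreamBuffer`, its fields, and the `reserve` method are hypothetical names invented for this illustration. It only tracks which byte range an application would map for each batch, and when it would ask for fresh storage.

```python
from dataclasses import dataclass, field


@dataclass
class StreamBuffer:
    """Bookkeeping model for streaming batches into one buffer object.

    No OpenGL calls are made; the class only tracks which byte ranges an
    application would map. All names here are hypothetical.
    """
    capacity: int
    head: int = 0          # next unwritten byte in the current storage
    generation: int = 0    # incremented on each whole-buffer invalidation
    regions: list = field(default_factory=list)  # (generation, offset, size)

    def reserve(self, size: int) -> tuple:
        """Return (generation, offset) of the range to map for one batch."""
        if size > self.capacity:
            raise ValueError("batch larger than the buffer")
        if self.head + size > self.capacity:
            # The batch does not fit: whole-buffer invalidation hands the old
            # storage to the GL and writing restarts at offset 0.
            self.generation += 1
            self.head = 0
        offset = self.head
        self.head += size
        # Write-once policy: each range of a generation is handed out once,
        # so data referenced by pending draws is never overwritten.
        self.regions.append((self.generation, offset, size))
        return self.generation, offset


buf = StreamBuffer(capacity=1024)
first = buf.reserve(400)    # fits at offset 0
second = buf.reserve(400)   # fits at offset 400
third = buf.reserve(400)    # does not fit: new generation, offset 0
```

Because every range is written at most once per generation, this pattern is one way to satisfy the safety requirement above without sync objects; an application that rewrites ranges would need explicit synchronization instead.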

As the Longs Peak spec is still evolving and minor naming or API changes may yet be made, some of the terminology above could change before the final spec is drafted and released. This article is intended to offer a “sneak peek” at the types of improvements under consideration. Please share your questions and feedback with us on the OpenGL forums.

T. Hunter

Object Model Technical SubGroup Contributor

Shaders Go Mobile: Announcing OpenGL ES 2.0

It’s here at last! At the Game Developers Conference in March, the OpenGL ES Working Group announced the release of OpenGL ES 2.0, the newest version of OpenGL for mobile devices. OpenGL ES 2.0 brings shader-based rendering to cell phones, set-top boxes, and other embedded platforms. The new specification has been three years in the making – work actually started before the release of our last major version, OpenGL ES 1.1. What took so long? When we created the ES 1.x specifications, we were using mature technology, following paths that the OpenGL ARB had thoroughly explored in older versions of the desktop API. With OpenGL ES 2.0, we moved closer to the cutting edge, so we had less experience to guide us. But the work is done now. We’re very pleased with what we came up with, and excited to have the specification released and silicon on the way. We think you’ll agree that it was worth the wait.

A Lean, Mean, Shadin’ Machine…

Like its predecessors, OpenGL ES 2.0 is based on a version of desktop OpenGL – in this case, OpenGL 2.0. That means, of course, that it supports vertex and fragment shaders written in a high-level programming language. But almost as interesting as what ES 2.0 has, is what it doesn’t have. As I said in the OpenGL ES article in OpenGL Pipeline #3, one of the fundamental design principles of OpenGL ES is to avoid providing multiple ways of achieving the same goal. In OpenGL 2.0 on the desktop, you can do your vertex and fragment processing in shaders or you can use traditional fixed-functionality transformation, lighting, and texturing controlled by state-setting commands. You can even mix and match, using the fixed-functionality vertex pipeline with a fragment shader, or vice versa. It’s powerful, flexible, and backward compatible; but isn’t it, perhaps, a little bit… redundant?

One of the first (and toughest) decisions we made for OpenGL ES 2.0 was to break backward compatibility with ES 1.0 and 1.1. We decided to interpret the “avoid redundancy” rule to mean that anything that can be done in a shader should be removed from the fixed-functionality pipeline. That means that transformation, lighting, texturing, and fog calculation have been removed from the API. We even removed alpha test, since you can perform it in a fragment shader using discard. Depth test, stencil test, and blending are still there, because you can’t perform them in a shader; even if you could read the frame buffer, these operations must be executed per sample, whereas fragment shaders work on fragments.
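To make the alpha test point concrete, here is a small Python model of what a fragment shader does when it replaces the fixed-function alpha test. It is only a sketch: real ES 2.0 shaders are written in GLSL ES, the function name `fragment_shader` and the threshold parameter `alpha_ref` are hypothetical, and returning `None` stands in for the shading language's `discard`.

```python
def fragment_shader(rgba, alpha_ref=0.5):
    """Return the fragment color, or None to model `discard`."""
    r, g, b, a = rgba
    if a < alpha_ref:
        # Equivalent in spirit to a fixed-function "pass if alpha >= ref"
        # alpha test: the fragment is dropped and nothing is written.
        return None
    return rgba


# Three illustrative fragments as (red, green, blue, alpha) tuples.
fragments = [(1.0, 0.0, 0.0, 0.9), (0.0, 1.0, 0.0, 0.1), (0.0, 0.0, 1.0, 0.5)]
surviving = [f for f in fragments if fragment_shader(f) is not None]
```

Only the fragments whose alpha reaches the reference value survive, which is all the removed fixed-function stage did.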

Living without the fixed-functionality pipeline may seem a little scary, but the advantages are enormous. The API becomes very simple and easy to learn – a handful of state-setting calls, plus a few functions to load and compile shaders. At the same time, the driver gets a lot smaller. An OpenGL 2.0 driver has to do a lot of work to let you switch back and forth smoothly between fixed-functionality and programmable mode, access fixed-functionality state inside your shaders, and so on. Since OpenGL ES 2.0 has no fixed-functionality mode, all of that complexity goes away.

…with Leather Seats, AC, and Cruise Control

OpenGL ES 2.0 lacks the fixed-functionality capability of OpenGL ES 1.x, but don’t get the impression that it is a stripped-down, bare-bones API. Along with the shader capability, we’ve added many other new features that weren’t available in ES 1.0 or 1.1. Among them are:

More Complex Vertices

ES 2.0 vertex shaders can declare at least eight general-purpose vec4 attributes, versus the five dedicated vertex arrays of ES 1.1 (position, normal, color, texcoord0, texcoord1). On the output side, the vertex shader can send at least eight vec4 varyings to the fragment shader.

Texture Features Galore

OpenGL ES 2.0 implementations are guaranteed to provide at least eight texture units, up from two in ES 1.1. Dependent texturing is supported, as are non-power-of-two texture sizes (with certain limitations). Cube map textures are added as well, because what fun would fragment shaders be without support for environment mapping, global illumination maps, directional lookup tables, and other cool hacks?

Stencil Buffer

All ES 2.0 implementations provide at least one configuration with simultaneous support for stencil and depth buffers.

Frame Buffer Objects

OpenGL ES 2.0 supports a version of the EXT_framebuffer_object extension as a mandatory core feature. This provides (among other things) an elegant way to achieve render-to-texture capabilities.

Blending

OpenGL ES 2.0 extends the options available in the fixed-functionality blending unit, adding support for most of BlendEquation and BlendEquationSeparate.

Options

Along with the ES 2.0 specification, the working group defined a set of options and extensions that are intended to work well with the API. These include ETC1 texture compression (contributed by Ericsson), 3D textures, NPOT mip-maps, and more.

The Shader Language

OpenGL ES 2.0 shaders are written in GLSL ES, a high-level shading language. GLSL ES is very similar to desktop GLSL, and it is possible (with some care, and a few well-placed #ifdefs) to write shader code that will compile under either. We’ll go over the differences in detail in a future issue of OpenGL Pipeline, and talk about how to write portable code.

Learning More

The ES 2.0 and GLSL ES 1.0 specifications are available for download at http://www.khronos.org/registry/gles/. The API document is a ‘difference specification’, and should be read in parallel with the desktop OpenGL 2.0 specification, available at http://www.opengl.org/documentation/specs/. The shading language specification is a stand-alone document.

Take it for a test drive

OpenGL ES 2.0 silicon for mobile devices won’t be available for a while yet, but you can get a development environment and example programs at http://www.imgtec.com/PowerVR/insider/toolsSDKs/KhronosOpenGLES2xSGX/. This package runs on the desktop under Windows or Linux, using an OpenGL 2.0 capable graphics card to render ES 2.0 content. Other desktop SDKs may well be available by the time you read this, so keep an eye on the Khronos home page and the resource list at http://www.khronos.org/developers/resources/opengles/. If you just want to experiment with the shading language, AMD has announced that GLSL ES will be supported in RenderMonkey 1.7, coming soon.

Tom Olson, Texas Instruments, Inc.

OpenGL ES Working Group Chair

Climbing OpenGL Longs Peak, Camp 3: An OpenGL ARB Progress Update

Longs Peak – 14,255 feet, 15th highest mountain in Colorado. Mount Evans is the 14th highest mountain in Colorado. (Therefore, we have at least 13 OpenGL revisions to go!)

Since the last edition of OpenGL Pipeline we’ve increased our efforts even more. We held a face-to-face meeting in March and another face-to-face meeting at the end of May. Currently we’re on track to meet face-to-face six times this year, instead of the usual four! The ARB recognizes it is extremely important to get OpenGL Longs Peak and Mount Evans done. We also still meet by phone five times per week. This is a big commitment from our members, and I’m very happy and proud to see the graphics industry working together to make OpenGL the best graphics platform!

A lot has happened since the last edition of Pipeline. Below follows a brief summary of the most important advances. Other articles in this edition will go into more detail on some of the topics. Happy reading!

Maximize vertex throughput using buffer objects. Just like in OpenGL 2.1, an application can map a buffer object in OpenGL Longs Peak. Mapping a buffer object returns a pointer to the application which can be used to write (or read) data to (or from) the buffer object. In OpenGL Longs Peak the mapping is made more sophisticated, with the end result that maximum parallelism can be achieved between the application writing data into the buffer object and the GL implementation reading data out of it. Read more about this cool feature in an article later in this newsletter.

More context creation options are available. In the previous edition of OpenGL Pipeline I described how we are planning on handling interoperability of OpenGL 2.1 and Longs Peak code. As a result, the application needs to explicitly create an OpenGL Longs Peak or OpenGL 2.x context. To aid debugging, it is also possible to request the GL to create a debug context. A debug context is only intended for use during application development. It provides additional validation, logging and error checking, but possibly at the cost of performance.

The object handle model is fleshed out. We finalized all the nitty-gritty details of the object model that have to do with object and handle creation and deletion, attachment of an object to a container object, and the behavior of these operations across contexts. Here is a brief summary:

- The GL creates handles, not the application, as can be the case in OpenGL 2.1. This is done in the name of efficiency.

- Object creation can be asynchronous. This means that it is possible that the creation of an object happens later in time than the creation of the handle to the object. A call to an object creation routine will return the handle to the caller immediately. The GL server might not get to the creation of the actual object until later. This is again done for performance reasons. The rule that all commands are executed in the order issued still applies (within a given context). Thus, asynchronous object creation might mean that a later request to operate on an object will have to block until the object is created. Fences and queries can help determine if this will be the case.

- Object use by the GL is reference counted. Once the “refcount” of an object goes to zero, the GL implementation is free to delete the storage of the object. Object creation sets the refcount to 1.

- The application does not delete an object, but instead invalidates the object handle. The invalidation decrements the object’s refcount.

- An object’s refcount is incremented whenever it is “in use.” Examples of “in use” include attaching an object to a container object, or binding an object into the context.

- Once a handle is invalidated, it cannot be used to refer to its underlying object anymore, even if the object still exists.
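The handle and refcount rules in the list above can be modeled in a few lines of Python. This is a toy model under stated assumptions, not the Longs Peak API: the class `GLModel` and its methods `create`, `attach`, `detach`, and `invalidate` are hypothetical names, object creation is treated as synchronous, and storage is freed immediately when the refcount reaches zero (the text only says the implementation is free to do so).

```python
class InvalidHandle(Exception):
    """Raised when an invalidated handle is used."""


class GLModel:
    """Toy model of the handle and reference-count rules (not a real API)."""

    def __init__(self):
        self._next_handle = 1
        self._handle_to_object = {}   # live handles -> object id
        self.refcount = {}            # object id -> reference count

    def create(self):
        # The GL, not the application, chooses the handle value.
        handle = self._next_handle
        self._next_handle += 1
        self._handle_to_object[handle] = handle
        self.refcount[handle] = 1     # creation sets the refcount to 1
        return handle

    def _resolve(self, handle):
        if handle not in self._handle_to_object:
            raise InvalidHandle(handle)
        return self._handle_to_object[handle]

    def attach(self, handle):
        """Mark the object "in use" (attached or bound); return its id."""
        obj = self._resolve(handle)
        self.refcount[obj] += 1
        return obj

    def detach(self, obj):
        self._release(obj)

    def invalidate(self, handle):
        # The handle becomes unusable; the object loses one reference.
        obj = self._resolve(handle)
        del self._handle_to_object[handle]
        self._release(obj)

    def _release(self, obj):
        self.refcount[obj] -= 1
        if self.refcount[obj] == 0:
            del self.refcount[obj]    # storage may now be freed

    def exists(self, obj):
        return obj in self.refcount


gl = GLModel()
tex = gl.create()
obj = gl.attach(tex)      # refcount is now 2
gl.invalidate(tex)        # refcount 1: handle unusable, object still alive
alive_after_invalidate = gl.exists(obj)
try:
    gl.attach(tex)
    handle_still_usable = True
except InvalidHandle:
    handle_still_usable = False
gl.detach(obj)            # refcount 0: storage may be freed
alive_after_detach = gl.exists(obj)
```

The sequence at the end shows the case the last bullet describes: after invalidation the object outlives its handle for as long as a container or the context still uses it.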

Most context state will be moved into an object. We are currently pondering which state stays in the context, and which context state is moved into an object. One interesting set of state I want to highlight is the state for the per-fragment operations, described in Chapter 4 of the OpenGL 2.1 specification. This state actually applies per sample, not per fragment. Think of state such as alpha test, stencil test, depth test, etc. We expect that some time in the future hardware will be available that makes all these operations programmable. Once that happens, we’ll define another program object type, and would like to be able to just “drop it in” to the framework defined in OpenGL Longs Peak. Therefore, we are working on defining a sample operation state object that contains all this state.

We’re also working on fleshing out the draw commands as well as display lists. Good progress was made defining what the draw calls will look like. We decided to keep it simple, and largely mirror what is done in OpenGL 2.1. There will be DrawArrays, DrawElements, etc. commands that take vertex indices. In order to actually render, at least a program object, a vertex array object, and an FBO need to be bound to the context. Possibly a sample operation state object, as described above, will also need to be bound.

You can meet the designers behind OpenGL Longs Peak and Mount Evans at Siggraph 2007 in August. The traditional OpenGL BOF (Birds of a Feather) will likely be on Wednesday evening, August 8th, from 6:00pm – 8:00pm. I hope to see you there!

In the remainder of this issue you’ll find an update from the OpenGL ES Working Group, a discussion of Longs Peak buffer object improvements, a look at the Longs Peak object model with source code samples, and an article showing how to use gDEBugger as a window exposing what’s happening within the GL.

Barthold Lichtenbelt, NVIDIA

Khronos OpenGL ARB Steering Group chair

Windows Vista and OpenGL - the Facts

April 20, 2007

The Khronos OpenGL ARB Working Group has received requests for clarification concerning the performance of OpenGL on Windows Vista. These questions are understandable as Microsoft has dramatically changed the user experience with the Windows Aero compositing desktop and introduced a completely new display driver architecture. This article will discuss how these changes affect OpenGL.

The industry now has a growing body of real-world experience in shipping OpenGL on Windows Vista, and the OpenGL ARB wishes to reinforce the positive synergy between OpenGL graphics and Windows Vista in three key areas:

- Windows Vista fully supports hardware accelerated OpenGL;

- OpenGL applications can benefit from Windows Vista's improved graphics resource management;

- OpenGL performance on Windows Vista is extremely competitive with the performance on Windows XP.

Windows Vista Fully Supports OpenGL

Hardware-accelerated OpenGL is fully supported on Windows Vista with the Windows Aero compositing desktop user experience - just as with Direct3D.

OpenGL hardware acceleration is handled in exactly the same way in Windows XP and Windows Vista - through an Installable Client Driver (ICD) provided by graphics card manufacturers. Without an OpenGL ICD installed, Windows XP and Windows Vista both revert to rendering OpenGL in software on the CPU rather than using GPU acceleration.

Figure 1 shows the path through which OpenGL and Direct3D applications render their output under Windows Vista. Firstly, the OpenGL or Direct3D user-mode graphics drivers take the data from the application and use the graphics hardware to render one frame of content. That frame is then presented to the Desktop Window Manager (DWM) which composites the frame into the desktop using GPU acceleration. The DWM provides the desktop compositing functionality used by the Windows Aero and Windows Standard user experiences in Windows Vista.

The DWM is a key element of the Windows Vista user experience and one of its main functions is to manage the presentation of the Windows desktop by compositing the outputs of multiple applications to the screen. As a complete 3D application in its own right, the DWM uses GPU memory and resources and places an additional load on the GPU, which impacts application graphics performance by approximately 10% for typical applications. End users should weigh the benefits of the DWM and the Windows Aero user interface against this performance cost. An easy way to experiment with a particular application is to disable the DWM, by selecting the Windows Classic or Windows Basic user interface style.

During full-screen applications, such as games, the DWM is not performing window compositing and therefore performance will not appreciably decrease.

Figure 1 - OpenGL, Direct3D and the Desktop Window Manager (DWM)

OpenGL Benefits from Improved Resource Management

The new Windows Display Driver Model (WDDM) in Windows Vista provides a firewall and the thunk layer (that handles communication between the graphics hardware/kernel mode graphics driver and the user-mode graphics driver) to increase overall system stability. This architecture differs from Windows XP, where the OpenGL ICD talked directly to the GPU hardware and handled many low-level functions, including memory management.

WDDM affords some implicit benefits for OpenGL applications. For example, graphics resources previously managed by the OpenGL ICD, such as video memory, are now virtualized by the operating system. When multiple applications are running, the OpenGL ICD exposes maximum texture storage capacity to each application and Windows Vista takes care of resource allocation and scheduling, resulting in more efficient use of resources across applications.

OpenGL Performance is Competitive on Windows Vista

Some have suggested that OpenGL performance on Windows Vista is poor compared to Windows XP. This is not the case.

A comparison between the performance on Windows XP and Windows Vista of the SPECviewperf professional OpenGL benchmark, two OpenGL game benchmarks, and two Direct3D game benchmarks is presented below.

Professional OpenGL Application Performance

The SPECviewperf figure below shows that the graphics performance of professional OpenGL applications on Windows Vista is very close to that on Windows XP. SPECviewperf runs in a window, and on Windows Vista the DWM was turned off, by selecting the Windows Classic color scheme, to achieve maximum graphics performance. These numbers are extremely impressive, given that the WDDM is a radical departure from the Display Driver model on Windows XP and the new Windows Vista drivers have not yet benefited from the years of tuning and optimization that the Windows XP OpenGL drivers have undergone. Software developers and graphics card manufacturers have had access to production-ready Windows Vista since November 2006, and graphics performance on Windows Vista will continue to improve over time as the drivers mature. As with any version of Microsoft Windows however, it is important to download the latest drivers from the graphics card vendor - particularly in the early lifetime of a new operating system.

If the DWM were enabled by turning on Windows Aero, performance would drop by about 10%. For many users this tradeoff is worthwhile in order to use the more advanced user interface.

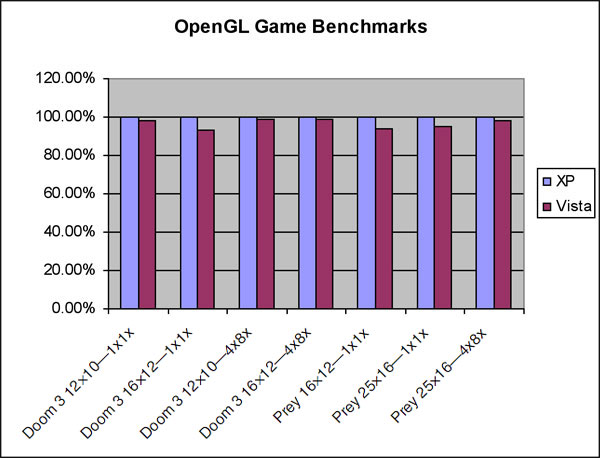

OpenGL Game Performance

The Doom3 (demo1) and Prey (move.demo) figures below show that Windows Vista performance for full-screen OpenGL games is comparable to the performance delivered on Windows XP. As these applications are full screen, DWM is not active and there is no performance drop in Windows Aero.

Direct3D Game Performance

The diagram below shows how the Direct3D games Half Life 2 Episode One (demo1) and Oblivion (Outdoor, HDR off) perform on Windows XP and Windows Vista. These numbers were obtained on the same system and same driver versions as the OpenGL benchmarks. In all cases the benchmarks were run in full-screen mode.

These figures show that Windows Vista performance for full-screen Direct3D games is comparable to the performance delivered on Windows XP - just as for OpenGL. As these applications are full screen, DWM is not active and there is no performance drop in Windows Aero.

These results confirm that both Direct3D and OpenGL are excellent 3D APIs for Windows Vista.

Conclusion

OpenGL is fully integrated into the Windows Vista display architecture just like Direct3D - both interfaces are first-class graphics API citizens. OpenGL hardware acceleration is available on Windows Vista through highly optimized drivers provided by the graphics hardware vendor, just as on Windows XP. OpenGL applications can benefit from the improved resource management provided by Windows Vista. OpenGL performance on Windows Vista is extremely competitive compared to Windows XP and will rapidly improve as support for this new operating system matures. The enhanced Windows Aero user interface can decrease the performance of graphics-intensive applications by about 10%, but this performance drop can be eliminated by selecting the Windows Classic or Windows Basic user interface style or by running applications full screen. To ensure maximum performance and stability on Windows Vista, users should regularly check for driver upgrades from their graphics hardware supplier.

The third edition of the OpenGL Pipeline Newsletter, an OpenGL ARB publication, has included an article written by NVIDIA for software developers with tips to get the maximum out of their OpenGL application on Windows Vista. This article can be found here: http://www.opengl.org/pipeline/article/vol003_7/

For questions about this article, or about OpenGL in general, please contact Barthold Lichtenbelt at blichtenbelt@nvidia.com, Khronos OpenGL ARB Working Group chair.

References

General overview “What is Aero”

Overview of how DX9, DX10, GDI, OpenGL and the DWM are architected under the WDDM

OpenGL ARB article with tips for ISVs developing OpenGL applications on Windows Vista

Game performance results were obtained on an AMD Athlon 64 FX-62 2.8 GHz system with a NVIDIA GeForce 7900GTX graphics card, with driver version 97.73 for Windows XP, and 101.20 for Windows Vista, running in full-screen mode. The SPECviewperf results were obtained on a Dual Xeon 3.0 GHz system with a NVIDIA Quadro FX 5500 graphics card, with driver version 91.36 for Windows XP, and 100.72 for Windows Vista.

OpenGL is a registered trademark of Silicon Graphics Inc. SPECviewperf® is a registered trademark of the Standard Performance Evaluation Corporation, www.spec.org. All other product names, trademarks, and/or company names are used solely for identification and belong to their respective owners.

Optimize Your Application Performance

In the previous article, “Clean your OpenGL usage using gDEBugger,” we demonstrated how gDEBugger can help you verify that your application uses OpenGL correctly and calls the OpenGL API commands you expect it to call. This article will discuss the use of ATI and NVIDIA performance counters together with gDEBugger's Performance Views to locate graphics pipeline performance bottlenecks.

Graphics Pipeline Bottlenecks

The graphics system generates images through a pipelined sequence of operations. A pipeline runs only as fast as its slowest stage. The slowest stage is often called the pipeline bottleneck. A single graphics primitive (for example, a triangle) has a single graphic pipeline bottleneck. However, the bottleneck may change when rendering a graphics frame that contains multiple primitives. For example, if the application first renders a group of lines and afterwards a group of lit and shaded triangles, we can expect the bottleneck to change.
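The statement that a pipeline runs only as fast as its slowest stage can be written down directly. The sketch below is a simplified Python model: the function name `bottleneck`, the stage names, and the per-frame costs are all hypothetical values chosen for illustration, and it assumes a fully pipelined steady state in which stages overlap perfectly.

```python
def bottleneck(stage_ms):
    """Return (stage name, frames per second) for per-frame stage costs.

    `stage_ms` maps a stage name to the milliseconds that stage needs per
    frame. In a fully pipelined steady state the slowest stage sets the pace.
    """
    name = max(stage_ms, key=stage_ms.get)
    return name, 1000.0 / stage_ms[name]


# Hypothetical per-frame costs, chosen only for illustration.
lines_batch = {"application": 2.0, "driver": 4.0, "geometry": 1.0, "raster": 0.5}
lit_triangles = {"application": 2.0, "driver": 4.0, "geometry": 10.0, "raster": 8.0}

lines_result = bottleneck(lines_batch)          # limited by the driver stage
triangles_result = bottleneck(lit_triangles)    # limited by the geometry stage
```

The two workloads mirror the lines-then-lit-triangles example: the same application can be limited by a different stage depending on what it is rendering.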

The OpenGL Pipeline

The OpenGL pipeline is an abstraction of the graphics system pipeline. It contains stages, executed one after the other. Such stages are:

- Application: the graphical application, executed on the CPU, calls OpenGL API functions.

- Driver: the graphics system driver runs on the CPU and translates OpenGL API calls into actions executed on either the CPU or the GPU.

- Geometric operations: the operations required to calculate vertex attributes and position within the rendered 2D image space. This includes: multiplying vertices by the model-view and projection matrices, calculating vertex lighting values, executing vertex shaders, etc.

- Raster operations: operations operating on fragments / screen pixels: reading and writing color components, reading and writing depth and stencil buffers, performing alpha blending, using textures, executing fragment shaders, etc.

- Frame buffer: a memory area holding the rendered 2D image.

Some of the pipeline stages are executed on the CPU; other stages are executed on the GPU. Most operations that are executed on the GPU are executed in parallel.

Remove Performance Bottlenecks

As mentioned in the “Graphics Pipeline Bottlenecks” section, the graphics system runs only as fast as its slowest pipeline stage, which is often called the pipeline bottleneck. The process for removing performance bottlenecks usually involves the following stages:

- Identify the bottleneck: Locate the pipeline stage that is the current graphics pipeline bottleneck.

- Optimize: Reduce the workload done in that pipeline stage until performance stops improving or until you have achieved the desired performance level.

- Repeat: Go back to stage 1.

Notice that after your performance optimizations are done, or after you have reached a bottleneck that you cannot optimize anymore, you can start adding workload to pipeline stages that are not fully utilized without affecting render performance. For example, use more accurate textures, perform more complicated vertex shader operations, etc.
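The identify, optimize, repeat cycle can be simulated with a short loop. This is a sketch under simplifying assumptions, not a description of any tool: the function `optimize`, its parameters, and the numbers are hypothetical, each optimization pass is assumed to scale a stage's cost by a fixed factor, and each stage has a floor below which it cannot be improved.

```python
def optimize(stage_ms, target_ms, floor_ms, step=0.8):
    """Repeat identify -> optimize until the target is met or we are stuck.

    stage_ms  : per-frame cost of each stage in milliseconds
    target_ms : desired frame time
    floor_ms  : lowest cost each stage can realistically reach
    step      : each optimization pass scales the stage cost by this factor
    """
    costs = dict(stage_ms)
    history = []
    while max(costs.values()) > target_ms:
        stage = max(costs, key=costs.get)            # 1. identify
        if costs[stage] <= floor_ms[stage]:
            break                                    # cannot optimize further
        costs[stage] = max(costs[stage] * step, floor_ms[stage])  # 2. optimize
        history.append(stage)                        # 3. repeat
    return costs, history


# Hypothetical workload: raster-bound at first, with an 8 ms frame target.
costs, history = optimize(
    {"geometry": 12.0, "raster": 20.0},
    target_ms=8.0,
    floor_ms={"geometry": 5.0, "raster": 5.0},
    step=0.5,
)
```

In this run the bottleneck moves from raster to geometry and back to raster before the target is reached, which is why the process has to restart from the identification step each time.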

gDEBugger Performance Graph View

gDEBugger Performance Graph view helps you locate your application's graphics pipeline performance bottlenecks; it displays, in real time, graphics system performance metrics. Viewing metrics that measure the workload done in each pipeline stage enables you to estimate the current performance pipeline bottleneck.

gDEBugger Performance Graph View helps you locate your application’s graphic pipeline performance bottlenecks.

Such metrics are: CPU user mode and privilege mode utilizations, graphics driver idle, GPU idle, vertex shader utilization, fragment shader utilization, video memory usage, culled primitives counters, frames per second (per render context), number of OpenGL function calls per frame, total size of all loaded textures (in texels) and many other counters.

There is no need to make any changes to your source code or recompile your application. The performance counters will be displayed inside the Performance Graph view.

gDEBugger supports operating system performance counters (Windows and Linux), NVIDIA's performance counters via NVPerfKit, ATI's performance metrics and gDEBugger's internal performance counters. Other IHVs' counters will be supported in the future.

gDEBugger Performance Analysis Toolbar

The Performance Analysis toolbar offers commands that enable you to pinpoint application performance bottlenecks by “turning off” graphics pipeline stages. If the performance metrics improve while “turning off” a certain stage, you have found a graphics pipeline bottleneck!

These commands include:

- Eliminate Draw Commands: Identify CPU and bus performance bottlenecks by ignoring all OpenGL commands that push vertices or texture data into OpenGL. When ignoring these commands, the CPU and bus workloads remain unchanged, but the GPU workload is almost totally removed, since most GPU activities are triggered by input primitives (triangles, lines, etc).

- Eliminate Raster operations: Identify raster operation bottlenecks by forcing OpenGL to use a 1x1 pixel viewport. Raster operations operate per fragment or pixel. By setting a 1x1 pixel viewport most raster operations will be eliminated.

- Eliminate Fixed Pipeline Lighting operations: Identify “fixed pipeline lighting” related calculation bottlenecks. This is done by turning off all OpenGL fixed pipeline lights. Notice that this command does not affect shaders that do not use the fixed pipeline lights.

- Eliminate Textures Data Fetch operations: Identify texture memory performance bottlenecks by forcing OpenGL to use 2x2 pixel stub textures instead of the application defined textures. By using such small stub textures, the texture data fetch operation workload will be almost completely removed.

- Eliminate Fragment Shader Operations: Identify fragment shader related bottlenecks by forcing OpenGL to use a very simple stub fragment shader instead of the application defined fragment shaders.

The “Combined” Approach

Combining the Performance Analysis toolbar with the Performance Graph view gives an even stronger ability to locate performance bottlenecks. Viewing the way performance metrics vary when disabling graphics pipeline stages can give excellent hints for locating the graphics pipeline performance bottleneck.

For example, an application runs at 20 F/S and has 100% fragment shader utilization and 30% vertex shader utilization. When disabling fragment shader operations, the metrics change to 50 F/S, 2% fragment shader utilization and 90% vertex shader utilization. The “combined” approach tells us that the current bottleneck is probably the fragment shader operations. It also tells us that if we optimize and reduce the fragment shader operation workload, the next bottleneck that we will come across will probably be the vertex shader operations.
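The reasoning in this example can be expressed as a small comparison routine. The Python sketch below is illustrative only and is not part of gDEBugger: the function `diagnose`, the metric names, and the `min_speedup` threshold of 1.2 are hypothetical. It takes the metrics measured before and after a stage is turned off and reports whether that stage looks like the bottleneck and which stage is likely to be next.

```python
def diagnose(baseline, disabled, stage_off, min_speedup=1.2):
    """Compare metrics before and after "turning off" one pipeline stage.

    baseline, disabled : dicts with "fps" and per-stage utilization (percent)
    stage_off          : name of the stage that was disabled
    min_speedup        : hypothetical threshold for a meaningful improvement
    """
    speedup = disabled["fps"] / baseline["fps"]
    is_bottleneck = speedup >= min_speedup
    # The busiest remaining stage is the likely next bottleneck.
    others = {k: v for k, v in disabled.items() if k not in ("fps", stage_off)}
    next_stage = max(others, key=others.get) if others else None
    return is_bottleneck, speedup, next_stage


# The numbers from the example above.
baseline = {"fps": 20, "fragment_shader": 100, "vertex_shader": 30}
disabled = {"fps": 50, "fragment_shader": 2, "vertex_shader": 90}
result = diagnose(baseline, disabled, "fragment_shader")
```

With the article's numbers the frame rate improves by a factor of 2.5 when fragment shading is disabled, and the vertex shader, now at 90% utilization, is flagged as the probable next bottleneck.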

We hope this article will help you optimize the performance of your OpenGL based applications. In our next article we will talk about OpenGL's debugging model and show how gDEBugger can help you find those “hard to catch” OpenGL-related bugs.

Yaki Tebeka, Graphic Remedy

CTO & Cofounder

Editor's Note: You'll remember from our first edition that Graphic Remedy and the ARB have teamed up to make gDEBugger available free to non-commercial users for a limited time.

OpenGL and Windows Vista™

So Windows Vista is here, but what does it mean for an OpenGL user and developer? In this article we will try to give OpenGL application developers a quick peek at what to expect and the current state of OpenGL on Windows Vista.

Windows Vista supports two primary OpenGL implementations:

- An OpenGL ICD (installable client driver) provided by the hardware manufacturer, whose renderer string varies by vendor. The OpenGL version supported depends on the hardware manufacturer.

- Microsoft's software OpenGL 1.1 implementation (renderer string is GDI Generic), which is clustered in the higher-numbered pixel formats.

Just like Windows XP, Windows Vista does not contain an OpenGL ICD "in the box." End users will need to install drivers from OEMs or video hardware manufacturers in order to access native hardware-accelerated OpenGL. These drivers can be found on the Web sites of most hardware manufacturers.

The two biggest changes that Windows Vista brings to OpenGL are:

- The new driver model, Windows Display Driver Model (WDDM), formerly known as Longhorn Display Driver Model (LDDM).

- The new Desktop Window Manager with its Desktop Compositing Engine provides 3D accelerated window composition when Windows Aero is turned on.

OpenGL and Direct3D are treated the same by Windows Vista, resulting in full integration into the OS for both APIs. For example, both Direct3D and OpenGL will get transparency and dynamic thumbnails when Windows Aero is on, and all the WDDM features (video memory virtualization, etc.) will work in a similar fashion.

Changes Introduced by the New Windows Display Driver Model

Under WDDM, Microsoft takes ownership of the virtualization of video resources, not only at the video memory level but also at the graphics engine level. In short, this means that multiple graphics applications can run simultaneously, scheduled round-robin by Windows Vista's Video Scheduler, and their working sets (video resources) will be paged in, as needed, by Windows Vista's Video Memory Manager.

Because the video hardware is virtualized, user-mode components (the OpenGL ICD is one of them) no longer have direct access to that hardware, and need a kernel transition in order to program registers, submit command buffers, or learn the real addresses of the video resources in memory.

Because Windows Vista controls the submission of graphics command buffers to the hardware, detecting hangs of the graphics chip due to invalid programming is now possible across the operating system. This is achieved via Windows Vista's Timeout Detection and Recovery (TDR). When a command buffer spends too long in the graphics chip (more than two seconds), the operating system assumes the chip is hung, kills all the graphics contexts, resets the graphics chip and recovers the graphics driver, in order to keep the operating system responsive. The user will then see a popup bubble stating "Display driver stopped responding and has recovered."

Changes Introduced by the Desktop Window Manager

Graphics applications now have to share resources with the 3D-accelerated window manager. Each OpenGL window now requires an offscreen frontbuffer, because there's no longer direct access to the surface being displayed: the desktop. This is also true when the Desktop Window Manager (DWM) is off.

In order for Windows Vista to perform compositing, DWM allocates an extra window-sized compositing buffer for each top-level window in the system. All these resources add up and increase the video memory footprint.

GDI is no longer hardware-accelerated, but instead rendered to system memory using the CPU. That rendering is later composed on a 3D surface in order to be shown on the desktop. The graphics hardware video driver is no longer involved in GDI rendering, which means that mixing GDI and accelerated 3D rendering in the same window is likely to produce corruption such as stale or blanked 3D rendering, trails, etc. Using Microsoft's OpenGL software rendering (the second of the two OpenGL implementations listed above) will achieve GDI compatibility, but at the expense of rendering speed and modern features.

Windows Vista running with Aero disabled.

Windows Vista running with Aero enabled. Note the semi-transparent window decorations and the dynamic thumbnails representing the running applications.

What All This Means for the OpenGL ICD User

Software application companies are preparing new versions of their OpenGL applications to take advantage of the new features and fix the possible incompatibilities that Windows Vista may have introduced.

Meanwhile,

- Current Windows XP full screen OpenGL applications are likely to work, although applications that use GDI under the covers (e.g. taking screenshots using Alt+Print Screen, or some enhanced GDI mouse pointers) may not work.

- Carefully written windowed applications should also work. Those that use both GDI and OpenGL may find that the Desktop Window Manager is disabled when they launch, with the message "The color scheme has been changed to Windows Vista Basic." The DWM will be turned on again when the application exits.

- For other windowed applications, if developers observe graphics corruption or lack of rendering refresh, developers may need to disable the DWM manually by switching to the "Windows Vista Basic" theme before starting the application. This also applies to an application's third-party plugins which require GDI interoperability without the application's knowledge. It is possible that some of them will cause corrupted rendering and will require developers to switch off DWM manually.

- Windowed applications that render to the frontbuffer but never call glFlush or glFinish (which they should be calling) are likely to appear completely black, because the rendering will sit forever in the offscreen frontbuffer. Not even switching the DWM off is likely to fix these, given that the offscreen frontbuffer is a requirement of the driver model itself.

- Windowed stereo rendering will not work.

- Simultaneously using graphics cards from multiple vendors will not work, given that Windows Vista only allows one WDDM driver to be loaded at a time. Note that multi-card solutions from the same vendor (NVIDIA® SLI™ or AMD™ CrossFire™) should work.

- Memory consumption reduction schemes like Unified Depth/Backbuffer are not possible under the DWM, which increases the memory footprint of the application.

Will My Applications Run Fast?

Performance-wise, developers can expect a decrease of around 10-15% on Windows Vista as compared to Windows XP. Applications that hit problematic cases (for example, excessive flushing, or rendering to the frontbuffer, as explained later) can see a larger performance degradation. However, expect this gap to narrow over time as the graphics hardware vendors further optimize their Windows Vista WDDM drivers.

WDDM's increased memory footprint and new video memory manager approach may worsen resource-hungry scenarios. Applications which were already pushing the limits of memory consumption on Windows XP, just barely fitting, may fall off a performance cliff on Windows Vista. This is due to excessive thrashing because available system and/or video memory is now exhausted.

What All This Means for the OpenGL Developer

GDI compatibility notes

GDI usage over 3D accelerated regions is incompatible with Windows Aero, so developers have two options:

- Disable Windows Aero

- Do not use GDI on top of OpenGL rendering.

Windows Vista introduces the new pixelformat flag PFD_SUPPORT_COMPOSITION (defined in the Driver Development Kit's wingdi.h as 0x00008000). Creating an OpenGL context for a pixelformat without this flag will disable composition for the duration of the process which created the context. The flag is mutually exclusive with PFD_SUPPORT_GDI.
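A minimal sketch of how an application might pick between the two mutually exclusive flags follows. The value of PFD_SUPPORT_COMPOSITION is the one quoted above; the other two values are the standard wingdi.h definitions, repeated here under #ifndef guards so the sketch builds without the Windows headers. The helper names request_flags and keeps_composition are hypothetical. In a real application the result would be stored in the dwFlags field of the PIXELFORMATDESCRIPTOR passed to ChoosePixelFormat.

```c
#include <stdbool.h>
#include <stdint.h>

/* Guarded so that the real wingdi.h definitions win when they are present. */
#ifndef PFD_SUPPORT_GDI
#define PFD_SUPPORT_GDI         0x00000010
#endif
#ifndef PFD_SUPPORT_OPENGL
#define PFD_SUPPORT_OPENGL      0x00000020
#endif
#ifndef PFD_SUPPORT_COMPOSITION
#define PFD_SUPPORT_COMPOSITION 0x00008000
#endif

/* Hypothetical helper: returns pixelformat flags that request exactly one of
   GDI support or composition support, never both. */
uint32_t request_flags(uint32_t base_flags, bool needs_gdi)
{
    /* Clear both exclusive flags first, whatever the caller passed in. */
    uint32_t flags = base_flags &
                     ~(uint32_t)(PFD_SUPPORT_GDI | PFD_SUPPORT_COMPOSITION);
    flags |= PFD_SUPPORT_OPENGL;
    flags |= needs_gdi ? PFD_SUPPORT_GDI : PFD_SUPPORT_COMPOSITION;
    return flags;
}

/* True when a context created with these flags leaves composition enabled. */
bool keeps_composition(uint32_t flags)
{
    return (flags & PFD_SUPPORT_COMPOSITION) != 0;
}
```

The caller would OR in its usual flags (window drawing, double buffering, and so on) through base_flags; the helper only arbitrates the GDI versus composition choice.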

If a developer must use GDI on top of an OpenGL context, use the following rules:

- Create an OpenGL context using a pixelformat with GDI support (PFD_SUPPORT_GDI flag set). As this flag is mutually exclusive with PFD_SUPPORT_COMPOSITION, this will disable Aero for the lifetime of the current process.

- Don't use BeginPaint/EndPaint outside the WM_PAINT message handling.

- As on Windows XP, use the API synchronization calls whenever necessary: GdiFlush to synchronize GDI with OpenGL rendering and glFinish for the converse.

On the other hand, if a developer wants to have Windows Aero enabled with a windowed OpenGL application, use the following rules to verify that you are not inadvertently trying to mix GDI over OpenGL:

- Create an OpenGL context using a pixelformat with compositing support (PFD_SUPPORT_COMPOSITION set).

- Handle the application window's WM_ERASEBKGND by returning non-zero in the message handler (this will avoid GDI clearing the OpenGL windows' background).

- Verify that the OpenGL window has the proper clipping styles WS_CLIPCHILDREN or WS_CLIPSIBLINGS, so GDI rendering of sibling windows in the layout hierarchy is not painted over and vice versa.

- Repaint the application's windows as they are being resized rather than when the final resize happens. This will avoid interacting with GDI's xor drawing of the window border. For example, if the application has splitter bars in a four-viewport application, resize the viewports as the splitter bar is being dragged, otherwise GDI xor rendering over the OpenGL viewport will leave trails.

- Do not use GDI for xor drawing of "rubberbands" or selection highlighting over the OpenGL rendering. Use OpenGL logical operations instead.

- Do not get the desktop's DC and try to paint over it with GDI, as it will corrupt the 3D-accelerated regions.

- Under the DWM's new architecture it is especially important that an application developer verify that the application pairs GetDC/ReleaseDC appropriately. The same goes for LockWindowUpdate and LockWindowUpdate(NULL).
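The advice about xor "rubberbands" rests on one property: applying the same xor twice restores the original pixels. The sketch below shows that property on a plain array of pixels on the CPU; it is an illustration only, and the function name xor_band is hypothetical. In OpenGL itself the equivalent effect is obtained with glEnable(GL_COLOR_LOGIC_OP) and glLogicOp(GL_XOR) before drawing the band.

```c
#include <stddef.h>
#include <stdint.h>

/* XOR a band colour into a row of pixels. Calling this twice with the same
   band colour leaves the row exactly as it started, which is what makes
   xor-drawn rubberbands erasable without redrawing the scene. */
void xor_band(uint32_t *pixels, size_t count, uint32_t band)
{
    for (size_t i = 0; i < count; ++i) {
        pixels[i] ^= band;
    }
}
```

Drawing the band once makes it visible; drawing it again at the same position erases it.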

Performance notes and other recommended practices

If an application renders to the frontbuffer, remember to call glFinish or glFlush whenever it needs the contents to be made visible on the screen. For the same reason, do not call those two functions too frequently, as they will incur the penalty of copying the contents of the offscreen frontbuffer to the desktop.

Calling SwapBuffers on windowed applications incurs two extra copies: one from the backbuffer to the composition surface, and then one from the composition surface to the final desktop.

Calling synchronization routines like glFlush, glFinish, SwapBuffers, or glReadPixels (or any command buffer submission in general) now incurs a kernel transition, so use them wisely and sparingly.

Given that under WDDM the OpenGL ICD relinquishes control over the desktop, fullscreen mode is now achieved by the driver in a similar way to Direct3D's exclusive mode. For that reason do not try to use GDI features on a fullscreen application (e.g. large GDI cursors, doing readbacks via GetDC/BitBlt), as they refer to the desktop which resides in a completely different piece of memory than the 3D rendering.

If the application performs extremely GPU-intensive and lengthy operations, for example rendering hundreds of fullscreen quads with a complex pixel shader in a single glDrawElements call, split the call into chunks and call glFlush/glFinish between them. Otherwise the work may exceed the two-second timeout and the application may be killed by Windows Vista's Timeout Detection and Recovery. The driver may be able to split long chunks of work for the application, but there will always be corner cases it cannot control, so don't rely solely on the driver to keep rendering from exceeding the two-second limit. Instead, anticipate these cases in your application and consider throttling the most intense rendering loads yourself.
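One way to structure the splitting is sketched below. It only plans the chunks and hands each one to a callback; in a real application the callback would issue glDrawElements for the given index range and then call glFlush or glFinish. The names draw_in_chunks, DrawChunkFn and tally_chunk are hypothetical, and a suitable chunk size is an assumption that has to be tuned per application and per GPU.

```c
#include <stddef.h>

/* Callback that issues one sub-draw covering index_count indices starting at
   first_index. A real implementation would wrap glDrawElements + glFlush. */
typedef void (*DrawChunkFn)(size_t first_index, size_t index_count, void *user);

/* Splits one large indexed draw into pieces of at most max_indices_per_chunk
   indices and returns the number of pieces issued. For triangle lists the
   chunk size should be a multiple of 3 so no triangle straddles two chunks. */
size_t draw_in_chunks(size_t total_indices, size_t max_indices_per_chunk,
                      DrawChunkFn draw, void *user)
{
    size_t chunks = 0;
    size_t first = 0;

    if (max_indices_per_chunk == 0 || draw == NULL) {
        return 0;
    }
    while (first < total_indices) {
        size_t remaining = total_indices - first;
        size_t count = remaining < max_indices_per_chunk
                           ? remaining
                           : max_indices_per_chunk;
        draw(first, count, user);
        first += count;
        ++chunks;
    }
    return chunks;
}

/* Example callback that only tallies how many indices it was asked to draw. */
void tally_chunk(size_t first_index, size_t index_count, void *user)
{
    (void)first_index;
    *(size_t *)user += index_count;
}
```

Keeping the splitting logic separate from the GL calls makes it easy to adjust the chunk size without touching the rendering code.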

Under Windows Vista, the notion of "available video memory" has even less significance than under Windows XP: first, it is hard for the application to account for the extra footprint needed by the new driver model, and second, the video memory manager may make more memory available to an application on an as-needed basis.

If your application handles huge datasets, you may find it competing for virtual address space with the video memory manager. In those cases it is recommended that developers move the application to 64-bit or, if that is not possible, build it with the /LARGEADDRESSAWARE flag and either use a 64-bit OS (which results in 4GB of user address space per process) or boot the 32-bit OS with the /3GB flag (which results in 3GB of user address space per process).

None of these solutions is completely trouble-free:

- Compiling for 64-bit has several caveats (e.g. sign extension, extra memory consumption due to larger pointers).

- Building with /LARGEADDRESSAWARE may break applications that assume the high bit of user space addresses will be clear.

- When using /3GB a developer may also need to tune the /userva boot parameter to prevent the kernel from running out of page table entries.
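The high-bit caveat can be illustrated with a short sketch. It models 32-bit addresses as plain uint32_t values rather than real pointers, and both function names are hypothetical. The signed cast relies on the two's-complement wrap-around that mainstream compilers provide for this conversion.

```c
#include <stdbool.h>
#include <stdint.h>

/* Buggy pattern: treating a 32-bit address as a signed integer. Any address
   at or above 0x80000000 becomes negative and compares as "lower" than every
   address below the 2GB line. */
bool addr_less_signed(uint32_t a, uint32_t b)
{
    return (int32_t)a < (int32_t)b;
}

/* Safe pattern: compare addresses as unsigned values. */
bool addr_less_unsigned(uint32_t a, uint32_t b)
{
    return a < b;
}
```

Code of the first kind works as long as every address stays below 2GB, which is exactly the assumption that /LARGEADDRESSAWARE removes.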

Fun with Windows.

Additional References

- DWM interaction with graphics APIs

http://blogs.msdn.com/greg_schechter/archive/2006/05/02/588934.aspx

- WDDM

http://msdn2.microsoft.com/en-us/library/aa973510.aspx

- TDR

http://www.microsoft.com/whdc/device/display/wddm_timeout.mspx

- /3GB and /userva

http://msdn2.microsoft.com/en-us/library/ms791558.aspx

- /LARGEADDRESSAWARE

http://blogs.msdn.com/oldnewthing/archive/2004/08/12/213468.aspx

http://support.microsoft.com/default.aspx?scid=889654

- 64-bit migration tips

http://msdn2.microsoft.com/en-us/library/aa384214.aspx

Antonio Tejada, NVIDIA

GLSL: Center or Centroid? (Or When Shaders Attack!)

Figure 1 - Correct (on left) versus Incorrect (on right). Note the yellow on the left edge of the “Incorrect” picture. Even though myMixer varies between 0.0 and 1.0, somehow myMixer is outside that range in the “Incorrect” picture.

Let's take a look at a simple fragment shader but with a simple non-linear twist:

varying float myMixer;