I implemented a cube-mapped sphere with UV coordinates. An algorithm generates the triangle mesh for the sphere and takes a parameter that controls the level of subdivision, so I can choose the level of detail based on how far away the point of view (POV) is.

I also implemented a straight UV mapping from the sphere coordinates to a 6-face cross-shaped texture.

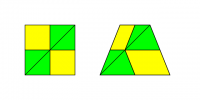

Basically, I create a cube first, with regular, uniform subdivision, and then project its vertices onto a unit sphere (I just normalize each vertex of the triangles that make up the cube). I use the cube face coordinates to build the UV coordinates.
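To make the setup concrete, here is a minimal sketch of how one face could be built this way. It is not my actual code: the names `FACE_TILES`, `face_point`, and `build_face` are made up for illustration, and the 4x3 cross layout and face orientations are assumptions that may not match my texture.

```python
import math

# Hypothetical (column, row) tile of each face inside a 4x3 cross-shaped texture.
# This layout is an assumption for illustration only.
FACE_TILES = {"+x": (2, 1), "-x": (0, 1), "+y": (1, 0),
              "-y": (1, 2), "+z": (1, 1), "-z": (3, 1)}

def face_point(face, a, b):
    """Map face-local coordinates a, b in [-1, 1] to a point on the cube surface."""
    return {
        "+x": (1.0, b, -a), "-x": (-1.0, b, a),
        "+y": (a, 1.0, -b), "-y": (a, -1.0, b),
        "+z": (a, b, 1.0), "-z": (-a, b, -1.0),
    }[face]

def normalize(p):
    """Project a cube point onto the unit sphere."""
    length = math.sqrt(sum(c * c for c in p))
    return tuple(c / length for c in p)

def build_face(face, n):
    """Return (positions, uvs, triangles) for one face split into n x n quads."""
    col, row = FACE_TILES[face]
    positions, uvs, triangles = [], [], []
    for j in range(n + 1):
        for i in range(n + 1):
            s, t = i / n, j / n  # uniform face coordinates in [0, 1]
            positions.append(normalize(face_point(face, 2 * s - 1, 2 * t - 1)))
            # Straight (unwarped) mapping of the face coordinates into the cross.
            uvs.append(((col + s) / 4.0, (row + t) / 3.0))
    for j in range(n):
        for i in range(n):
            v0 = j * (n + 1) + i  # first corner of the current quad
            v1, v2, v3 = v0 + 1, v0 + n + 1, v0 + n + 2
            triangles += [(v0, v1, v2), (v1, v3, v2)]  # two triangles per quad
    return positions, uvs, triangles
```

Calling `build_face("+z", 4)` would give a 5x5 grid of vertices and 32 triangles for that face; raising `n` is what the subdivision parameter does.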

As long as the texture matches the size and proportions that the UV mapper expects, I can reuse this sphere over and over again with as many textures as I want.

All good and dandy.

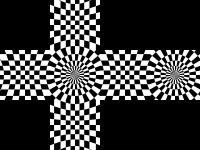

Everything works fine, except that the textures get “warped”, as expected. They look “fish-eyed” towards the center of each face and compressed at the corners. Again … no surprises there.
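The warping can be put into numbers with a small sketch. It assumes a face at z = 1 and looks only at the middle row of that face, where a cube point (x, 0, 1) ends up at angle atan(x) from the face center after normalization. The function names are made up for illustration.

```python
import math

def angle_from_center(x):
    """Angle in radians between the face center and the projected point (x, 0, 1)."""
    return math.atan(x)

def angular_steps(n):
    """Angle covered by each of n uniform cube-space steps from face center to edge."""
    xs = [i / n for i in range(n + 1)]  # uniform steps in cube space, 0 to 1
    return [angle_from_center(b) - angle_from_center(a) for a, b in zip(xs, xs[1:])]

steps = angular_steps(8)
# How much wider the step next to the center is than the step next to the edge.
ratio = steps[0] / steps[-1]
```

Since the derivative of atan(x) is 1 / (1 + x²), the ratio approaches 2 as the subdivision gets finer: an equal-sized slice of texture covers roughly twice the angle at the center of a face as it does at the edge, which matches the stretched middle and squeezed borders.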

What I would like to know is this: is there an algorithm that would let me “pre-warp” the UV coordinates so that, when the texture is sampled at render time, the warping cancels out and the texture looks somewhat “normal”?
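For concreteness, this is the shape of function I am picturing. The arctangent remap below is only a guess at a candidate, not something I have confirmed in my renderer, and the names `prewarp` and `prewarp_uv` are made up. It would be applied to the per-face coordinates in [0, 1] before they are placed into the cross-shaped texture.

```python
import math

def prewarp(s):
    """Remap a face coordinate s in [0, 1] so that equal texture steps span
    equal angles along the middle row or column of the face."""
    x = 2.0 * s - 1.0  # back to cube space, [-1, 1]
    # atan(x) lies in [-pi/4, pi/4], so the result stays in [0, 1].
    return 0.5 + 2.0 * math.atan(x) / math.pi

def prewarp_uv(s, t):
    """Apply the same remap independently to both face coordinates."""
    return prewarp(s), prewarp(t)
```

The endpoints and the center stay fixed (0, 0.5, and 1 map to themselves), so neighbouring faces would still meet at their edges. Because each axis is treated independently, I would expect this to even things out along the middle of a face but still leave some distortion towards the corners. The UVs are also interpolated linearly inside each triangle, so the result would depend on the subdivision level.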

I have seen people get around this by pre-warping the texture itself. However, if possible, I’d rather not bother with pre-warping the texture and instead handle it with an algorithm while I am generating the UV coordinates …

I am not sure if that’s possible. I did not find anything viable by searching … so I thought I would ask.

I have a sneaking suspicion that what I want to do is not entirely possible … I tried playing with trigonometry and vector math but I couldn’t come up with something viable … maybe my college math is a bit rusty.

Thank you!!