I have a medium-sized scene that costs far more performance than it should:

Lighting and shadows are disabled; all it’s doing is rendering the meshes, nothing more.

Here’s some debug info for that particular scene:

- Triangles: 162123
- Vertices: 213934
- Shader Changes: 5
- Material Changes: 229
- Meshes: 12281 (99.58% static, where "static" means any mesh without unusual vertex attributes, such as the vertex weights of animated meshes)
- Average number of triangles per mesh: 13
- Render Duration (GPU): 134.107 ms (~7.46 frames per second)
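The derived figures above can be cross-checked, and the frame time turned into a per-draw budget, with a few lines of arithmetic. This is a small C++ sketch: `FrameStats` and the helper functions are illustrative names, the inputs are copied from the list above, and it assumes the measured GPU time is spread evenly over the draw calls.

```cpp
#include <cassert>
#include <cstdint>

// Numbers copied from the debug info; nothing here talks to the GPU.
struct FrameStats {
    std::uint64_t triangles;
    std::uint64_t meshes;    // one glDrawElementsBaseVertex call per mesh
    double gpuMilliseconds;  // measured render duration
};

// Frame rate if the frame took exactly the measured GPU time.
double framesPerSecond(const FrameStats& s) {
    assert(s.gpuMilliseconds > 0.0);
    return 1000.0 / s.gpuMilliseconds;
}

// Average triangles per draw call (integer division, as in the stats).
std::uint64_t trianglesPerMesh(const FrameStats& s) {
    assert(s.meshes > 0);
    return s.triangles / s.meshes;
}

// Average GPU time per draw call in microseconds, assuming the frame time
// is spread evenly over all draws.
double microsecondsPerDraw(const FrameStats& s) {
    assert(s.meshes > 0);
    return s.gpuMilliseconds * 1000.0 / static_cast<double>(s.meshes);
}

const FrameStats kScene{162123, 12281, 134.107};
```

With these inputs each draw averages roughly 10.9 µs for about 13 triangles, which is consistent with the profiler pointing at the per-call cost rather than at triangle throughput.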

My rendering pipeline currently looks like this (pseudocode):

```
startGPUTimer()

glBindVertexArray(vao);

foreach shader
    glUseProgram(shader)

    foreach material
        glBindTexture(material)

        foreach mesh
            glDrawElementsBaseVertex(GL_TRIANGLES, numElements, GL_UNSIGNED_INT, offsetToIndices, vertexOffset);
        end
    end
end

endGPUTimer()
```
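The nested loop is a state-sorted submission: draws are grouped by program, then by material, so binds only happen when state actually changes. The C++ sketch below models that ordering without making any GL calls; `DrawItem`, `SubmitCounts` and `sortAndCount` are hypothetical names. It illustrates why the stats differ so much: binds scale with the number of distinct shader/material pairs, while draws still scale with the number of meshes.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <tuple>
#include <vector>

// Hypothetical per-mesh record mirroring the arguments used in the loop.
struct DrawItem {
    unsigned shader;         // program for glUseProgram
    unsigned material;       // material/texture for glBindTexture
    int numElements;         // index count for glDrawElementsBaseVertex
    std::size_t firstIndex;  // position of the first index in buffer #2
    int baseVertex;          // vertexOffset in the loop
};

struct SubmitCounts {
    std::size_t shaderChanges = 0;
    std::size_t materialChanges = 0;
    std::size_t drawCalls = 0;
};

// Sorts by (shader, material), then counts the binds and draws the nested
// loop would issue. A new shader always starts a new material loop, so the
// material is rebound after every shader change.
SubmitCounts sortAndCount(std::vector<DrawItem>& items) {
    std::sort(items.begin(), items.end(), [](const DrawItem& a, const DrawItem& b) {
        return std::tie(a.shader, a.material) < std::tie(b.shader, b.material);
    });

    SubmitCounts counts;
    bool haveState = false;
    unsigned curShader = 0;
    unsigned curMaterial = 0;
    for (const DrawItem& item : items) {
        assert(item.numElements > 0);  // every draw submits at least one index
        const bool shaderChanged = !haveState || item.shader != curShader;
        if (shaderChanged) {
            ++counts.shaderChanges;    // glUseProgram
            curShader = item.shader;
        }
        if (shaderChanged || item.material != curMaterial) {
            ++counts.materialChanges;  // glBindTexture
            curMaterial = item.material;
        }
        ++counts.drawCalls;            // glDrawElementsBaseVertex, one per mesh
        haveState = true;
    }
    return counts;
}
```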

There are two buffers: buffer #1 holds the position, UV and normal data for all meshes, and buffer #2 holds the indices. Both are attached to the vertex array object (VAO), which is bound only ONCE per frame.
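For reference, this is how each mesh's glDrawElementsBaseVertex arguments relate to the two shared buffers. A minimal C++ sketch, assuming 32-bit indices (matching GL_UNSIGNED_INT in the loop) and an interleaved position/UV/normal vertex; `Vertex`, `Mesh`, `MeshRange` and `SharedBuffers` are hypothetical types that only build the CPU-side arrays which would then be uploaded with glBufferData.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical vertex layout for buffer #1: position, UV and normal.
struct Vertex {
    float position[3];
    float uv[2];
    float normal[3];
};

struct Mesh {
    std::vector<Vertex> vertices;
    std::vector<std::uint32_t> indices;  // local indices, starting at 0
};

// Where one mesh lives inside the shared buffers; these values become the
// count, indices and basevertex arguments of glDrawElementsBaseVertex.
struct MeshRange {
    std::int32_t numElements;
    std::size_t indexByteOffset;  // byte offset into buffer #2
    std::int32_t baseVertex;      // added to every index when drawing
};

struct SharedBuffers {
    std::vector<Vertex> vertexData;        // contents of buffer #1
    std::vector<std::uint32_t> indexData;  // contents of buffer #2

    // Appends a mesh without rewriting its indices: baseVertex makes local
    // index 0 refer to the mesh's first vertex in the shared buffer.
    MeshRange append(const Mesh& mesh) {
        assert(!mesh.indices.empty());
        MeshRange range{};
        range.numElements = static_cast<std::int32_t>(mesh.indices.size());
        range.indexByteOffset = indexData.size() * sizeof(std::uint32_t);
        range.baseVertex = static_cast<std::int32_t>(vertexData.size());
        vertexData.insert(vertexData.end(), mesh.vertices.begin(), mesh.vertices.end());
        indexData.insert(indexData.end(), mesh.indices.begin(), mesh.indices.end());
        return range;
    }
};
```

When drawing, `indexByteOffset` is passed (cast to `const void*`) as the indices argument, because an element array buffer is bound through the VAO, so that argument is interpreted as a byte offset into it.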

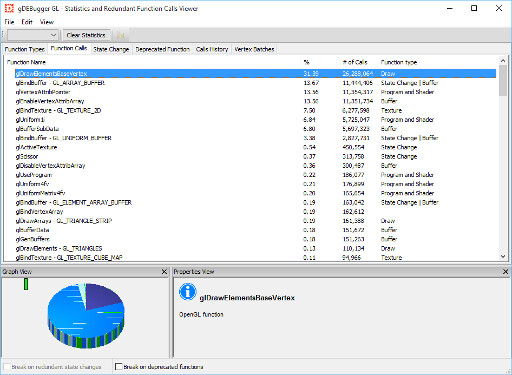

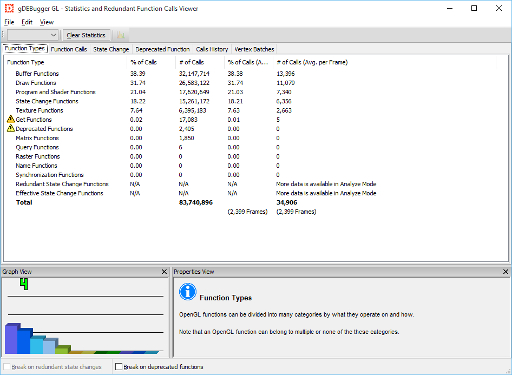

The biggest cost comes from glDrawElementsBaseVertex, which is called once per mesh (12281 times in this case). Here’s the result of profiling the scene with gDEBugger:

My problem is that I simply don’t know how to optimize this. I’m already doing frustum culling, and occlusion queries wouldn’t help, since almost nothing is occluded and most meshes are very small.
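For context, per-mesh frustum culling is typically a bounding-volume test like the one below. This is a generic sketch, not the code used here; it assumes each mesh has a bounding sphere and that the six frustum planes are already extracted with normalized, inward-facing normals (`Vec3`, `Plane`, `Frustum` and `sphereVisible` are illustrative names).

```cpp
#include <array>
#include <cassert>

struct Vec3 {
    float x, y, z;
};

// Plane in the form dot(normal, p) + d = 0, normal pointing into the frustum.
// Normals are assumed normalized so distances are in world units.
struct Plane {
    Vec3 normal;
    float d;
};

using Frustum = std::array<Plane, 6>;

inline float signedDistance(const Plane& plane, const Vec3& point) {
    return plane.normal.x * point.x + plane.normal.y * point.y +
           plane.normal.z * point.z + plane.d;
}

// Conservative test: a sphere is rejected only if it lies entirely behind
// one plane, so some spheres near frustum corners are kept unnecessarily.
bool sphereVisible(const Frustum& frustum, const Vec3& center, float radius) {
    assert(radius >= 0.0f);
    for (const Plane& plane : frustum) {
        if (signedDistance(plane, center) < -radius) {
            return false;
        }
    }
    return true;
}
```

Meshes that pass the test still cost one draw call each, so culling lowers the draw count only by the share of meshes outside the view.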

I also can’t use instancing, because only very few meshes are exactly the same (I’m already using LODs for the trees, but the triangle count isn’t the issue here).
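Whether instancing can help comes down to how many draws share identical geometry. A rough C++ sketch for measuring that, assuming identical geometry can be recognized by an identical index range and base vertex in the shared buffers (`GeometryKey` and `estimateInstancing` are hypothetical; a real check would also require matching shader and material).

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <tuple>
#include <vector>

// Hypothetical geometry identity: same index range and base vertex means
// the same mesh data. Shader and material are ignored here for brevity.
struct GeometryKey {
    std::size_t firstIndex;
    int numElements;
    int baseVertex;

    bool operator<(const GeometryKey& o) const {
        return std::tie(firstIndex, numElements, baseVertex) <
               std::tie(o.firstIndex, o.numElements, o.baseVertex);
    }
};

struct InstancingEstimate {
    std::size_t drawsNow;        // one draw per mesh
    std::size_t drawsInstanced;  // one instanced draw per distinct geometry
};

InstancingEstimate estimateInstancing(const std::vector<GeometryKey>& meshes) {
    std::map<GeometryKey, std::size_t> copies;  // geometry -> number of meshes
    for (const GeometryKey& key : meshes) {
        assert(key.numElements > 0);  // every mesh draws at least one index
        ++copies[key];
    }
    return {meshes.size(), copies.size()};
}
```

When almost every mesh is unique, `drawsInstanced` stays close to `drawsNow`, which matches the situation described above.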

I know that 12281 draw calls are a lot, but surely that shouldn’t be nearly enough to cause such a drop in performance? I know for a fact that the shader and the actual rendering are not at fault.

I’d like to keep everything as dynamic as possible, so no texture atlases, binary space partitioning, etc.

What are my options?